Pentagon-Anthropic AI Military Dispute: What Defense Contract Tensions Mean for Security Technology

February 4, 2026

Pentagon-Anthropic AI Military Dispute

- Pentagon officials express frustration with Anthropic's restrictions on military applications of its Claude AI system

- The dispute centers on acceptable use policies that limit defense and security deployments of commercial AI technology

- This conflict reflects broader tensions between AI companies' ethical guidelines and government security requirements

- The outcome could reshape how defense agencies procure and deploy AI-powered security solutions

Inside the Pentagon-Anthropic Contract Dispute

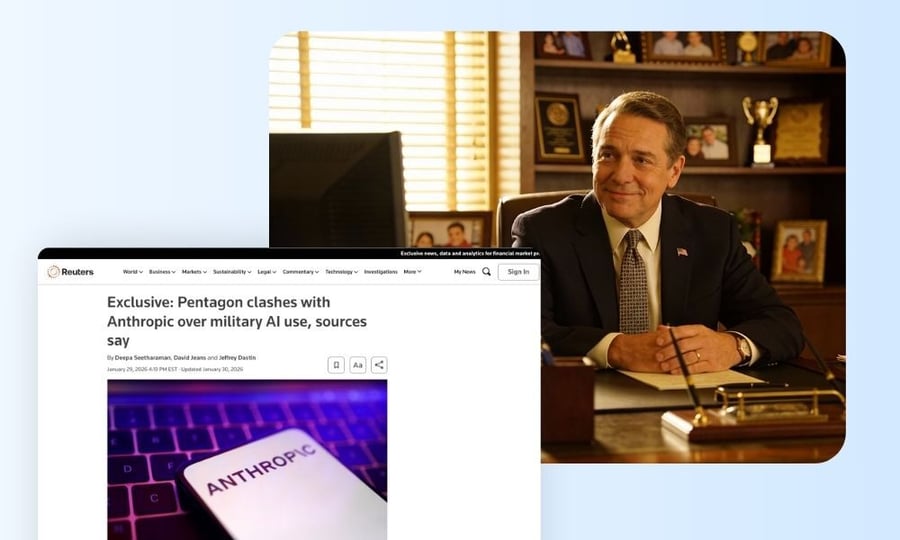

The U.S. Department of Defense has found itself in a significant disagreement with the AI company Anthropic over the military applications of artificial intelligence. According to an exclusive Reuters report, tensions have emerged over Anthropic's acceptable use policies, which restrict how its Claude AI system can be deployed in military and defense contexts.

Pentagon sources indicate frustration with limitations that prevent full utilization of AI capabilities for national security applications. The San Francisco-based AI company, founded by former OpenAI executives, has maintained strict ethical guidelines around military use of its technology.

This dispute highlights the growing challenge that defense agencies face in leveraging commercial AI innovations. Anthropic has positioned itself as a safety-focused AI company, emphasizing responsible development and deployment of large language models.

The conflict comes as the Pentagon increasingly seeks to integrate advanced AI capabilities across defense operations. Military leaders view artificial intelligence as critical for maintaining technological superiority and enhancing security infrastructure.

Why Pentagon AI Procurement Challenges Matter for Security Technology

This high-profile dispute has broader implications for how government agencies and security organizations access cutting-edge AI technology. When commercial AI companies impose restrictive use policies, the resulting procurement gaps can affect everything from threat detection to emergency response systems.

A misalignment between AI providers' use policies and the agencies that deploy their technology can delay critical security infrastructure upgrades and limit access to the most advanced detection capabilities.

The Pentagon's experience with Anthropic reflects the challenges faced by state and local agencies seeking AI-powered security solutions. Many commercial AI providers maintain policies that restrict or complicate government and security applications, forcing agencies to seek specialized alternatives.

Security leaders should expect continued fragmentation in the AI market between general-purpose commercial systems and purpose-built security applications. This divide will likely accelerate the development of AI solutions specifically designed for defense and security use cases.

Procurement Strategy Lessons from the Pentagon-Anthropic Conflict

Security organizations can draw three lessons from this contract dispute:

- Acceptable use policies should be thoroughly reviewed before committing to any AI platform or service agreement.

- Agencies must evaluate whether commercial AI restrictions align with their operational requirements.

- Organizations should prioritize AI solutions purpose-built for security applications rather than adapting general-purpose systems.

Long-term technology strategies should account for the evolving realities of AI availability and restrictions. Building relationships with providers who understand security requirements can prevent costly delays and operational limitations.

VOLT's AI detection system has been specifically designed for security applications, helping institutions identify threats in under 3 seconds without the deployment restrictions that affect general-purpose AI systems.

Want to understand how AI-powered weapon detection can transform your security operations? Explore more at our resource center.